The Team:

- Meet Palan

- Rob Nashed

- Clay Tercek

- Woo Song

About The Project

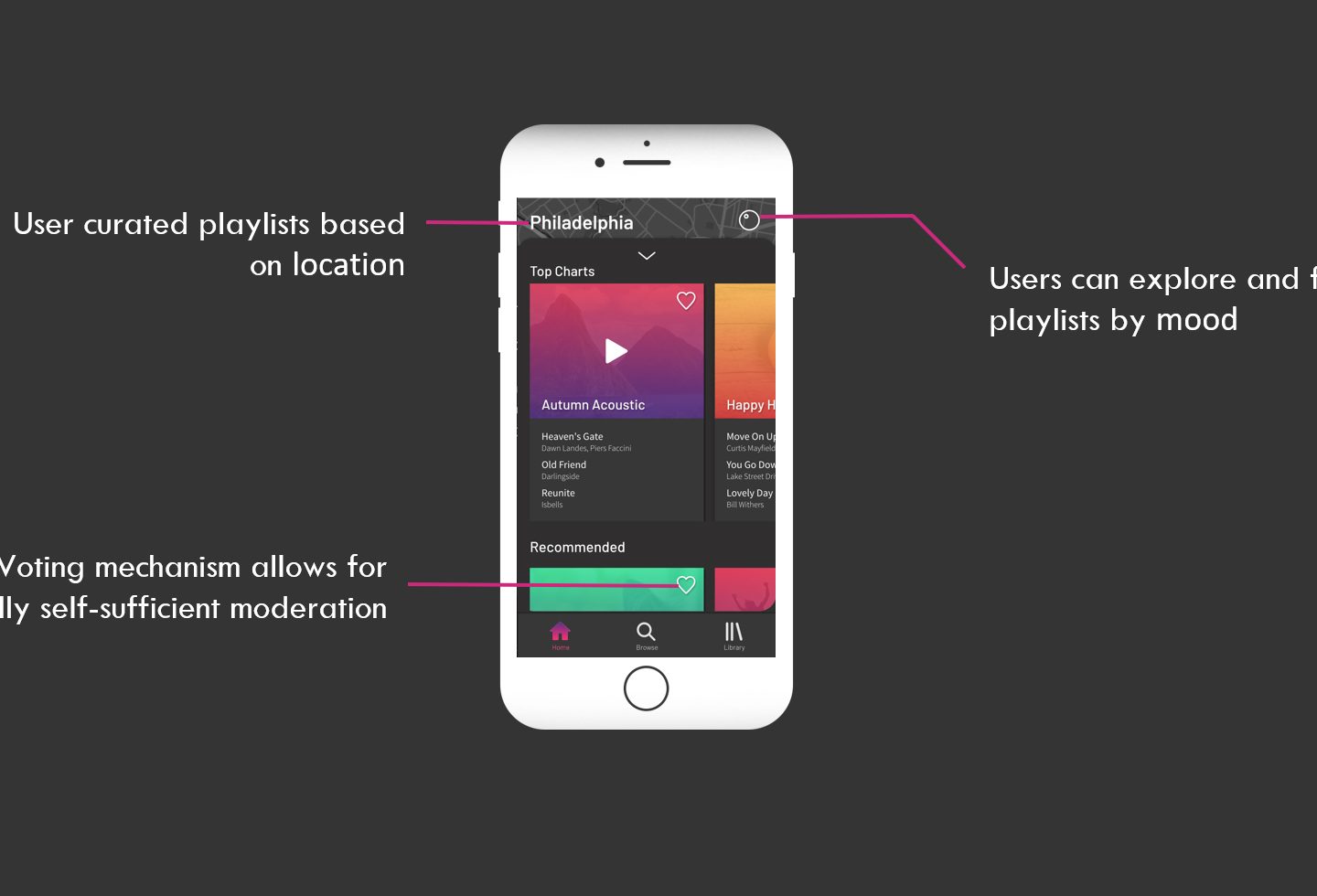

Resonate is an app that helps people explore and share new music playlists from around the world associated with different moods (emotions and feelings) and activities. Users can “heart” a playlist to boost its ranking on the charts.

Problem: There is no real way to discover new music based on mood, activity, or even location.

The solution: We created Resonate, which helps users discover newly recommended songs based on mood, activity, and location preferences.

Timeline: 10 weeks

Roles: Meet Palan – UX, Rob Nashed – UI, Clay Tercek – PM, Woo Song – DEV

Understanding the Project

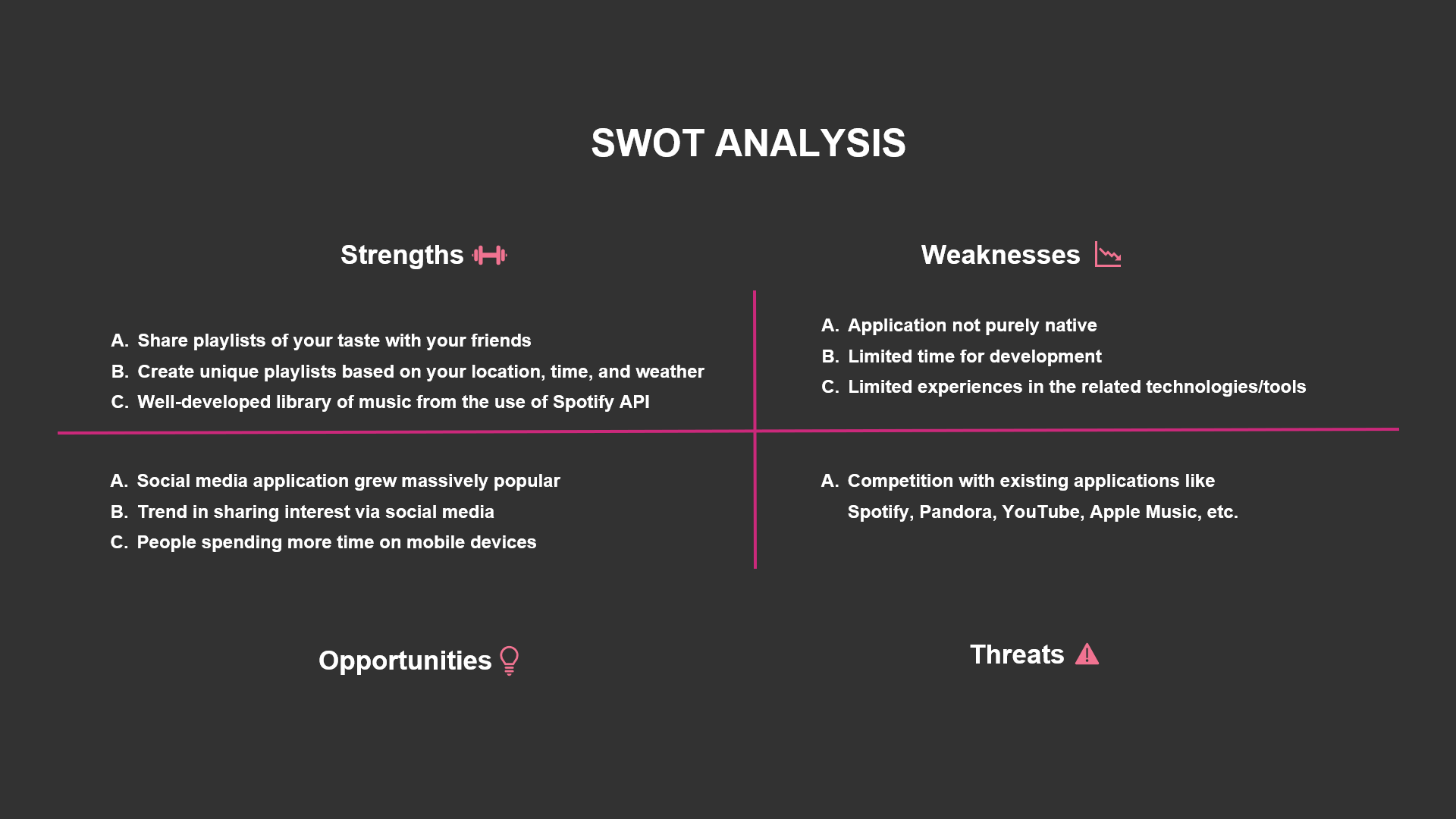

SWOT Analysis Exercise

We started out by listing our project's strengths, weaknesses, opportunities, and threats in order to understand its possibilities and limitations.

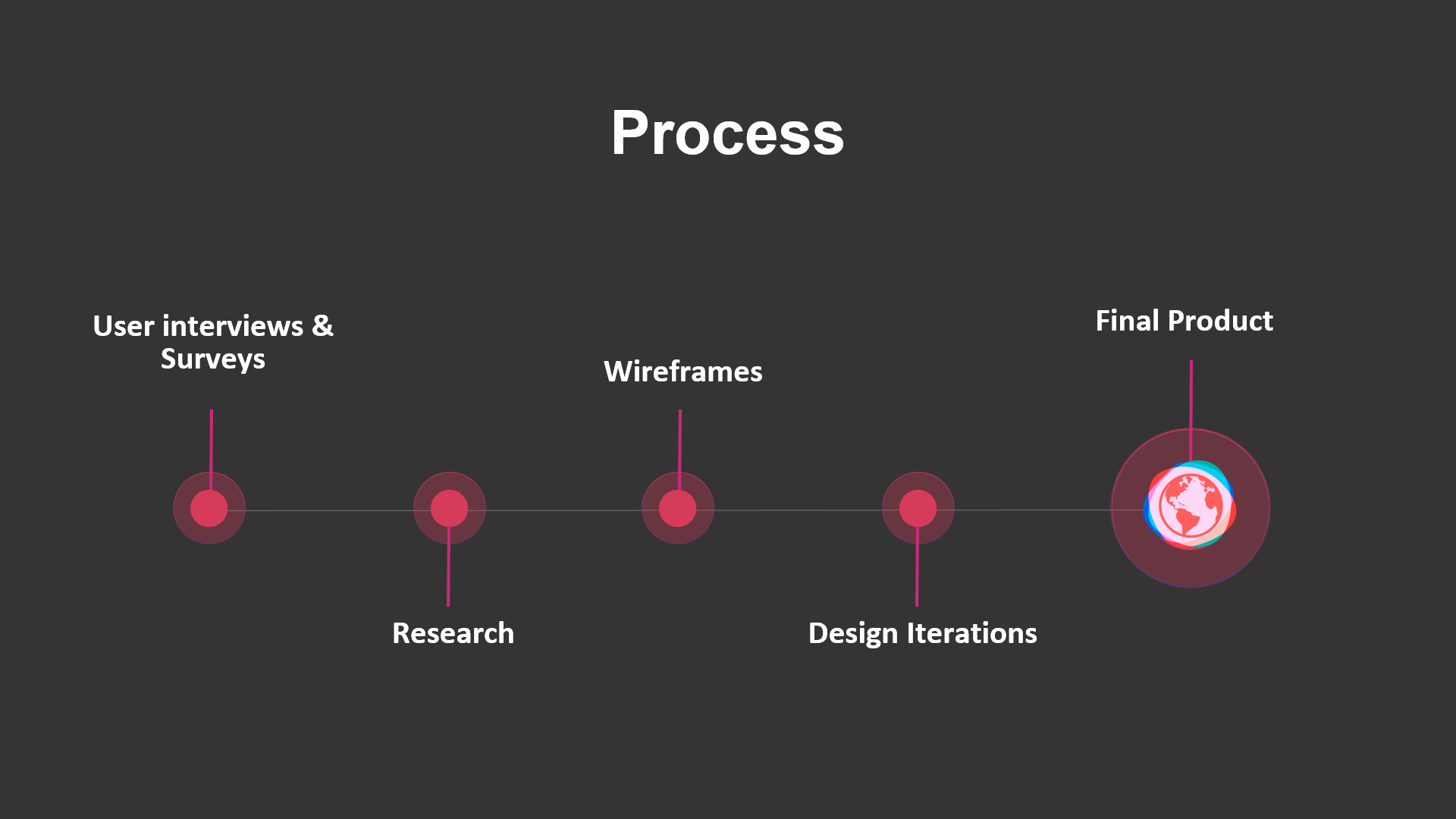

Our Process

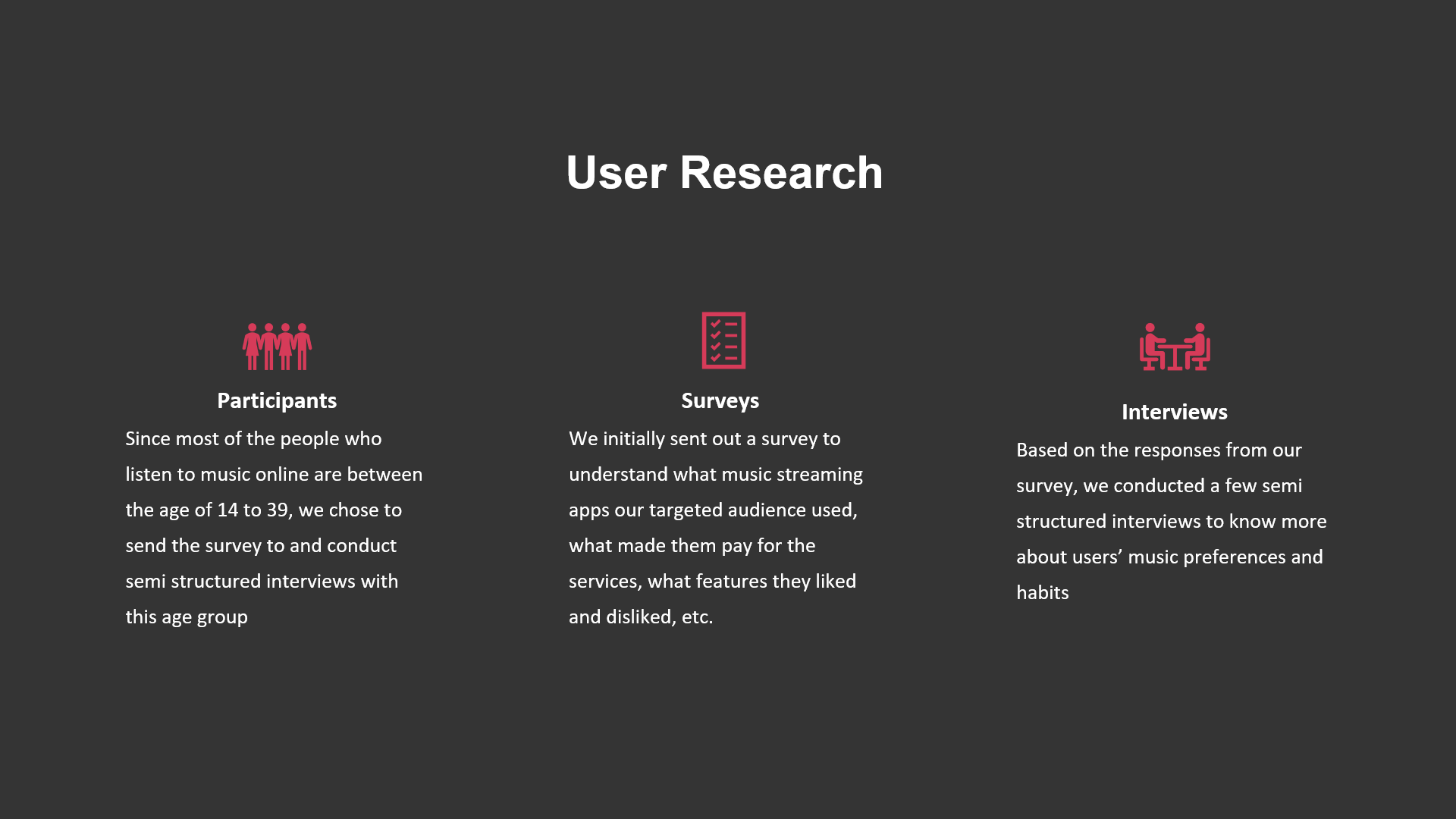

We divided our process into three main categories: User Research, Ideation, and Design/Prototype. Our user research was further divided into surveys and semi-structured informal interviews.

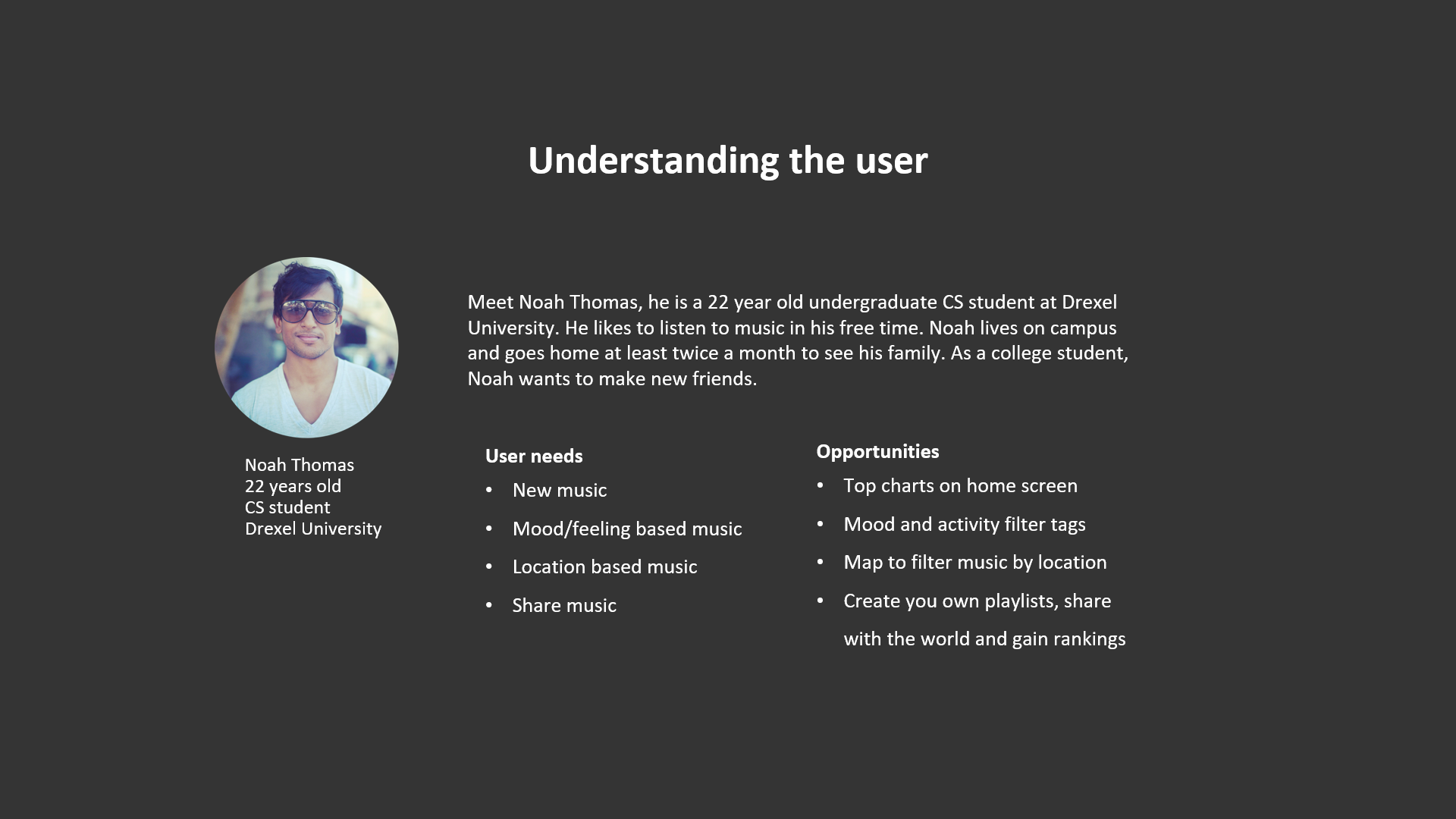

Understanding our Users

Identifying The Target Audience

Whether young or old, everyone listens to music, but we wanted to focus on people who use online music streaming services such as Spotify, Apple Music, and Pandora. Research showed that people between the ages of 14 and 39 (mostly students and young professionals) listen to music online, so we set our target audience to be students and young professionals.

Understanding users’ relationships with music

Some of the key findings from our user research:

- People like sharing music with their friends.

- People listen to music during long travels.

- People have memories of songs from particular places.

- People listen to music while doing something on the side, like exercising, studying, meditating, etc.

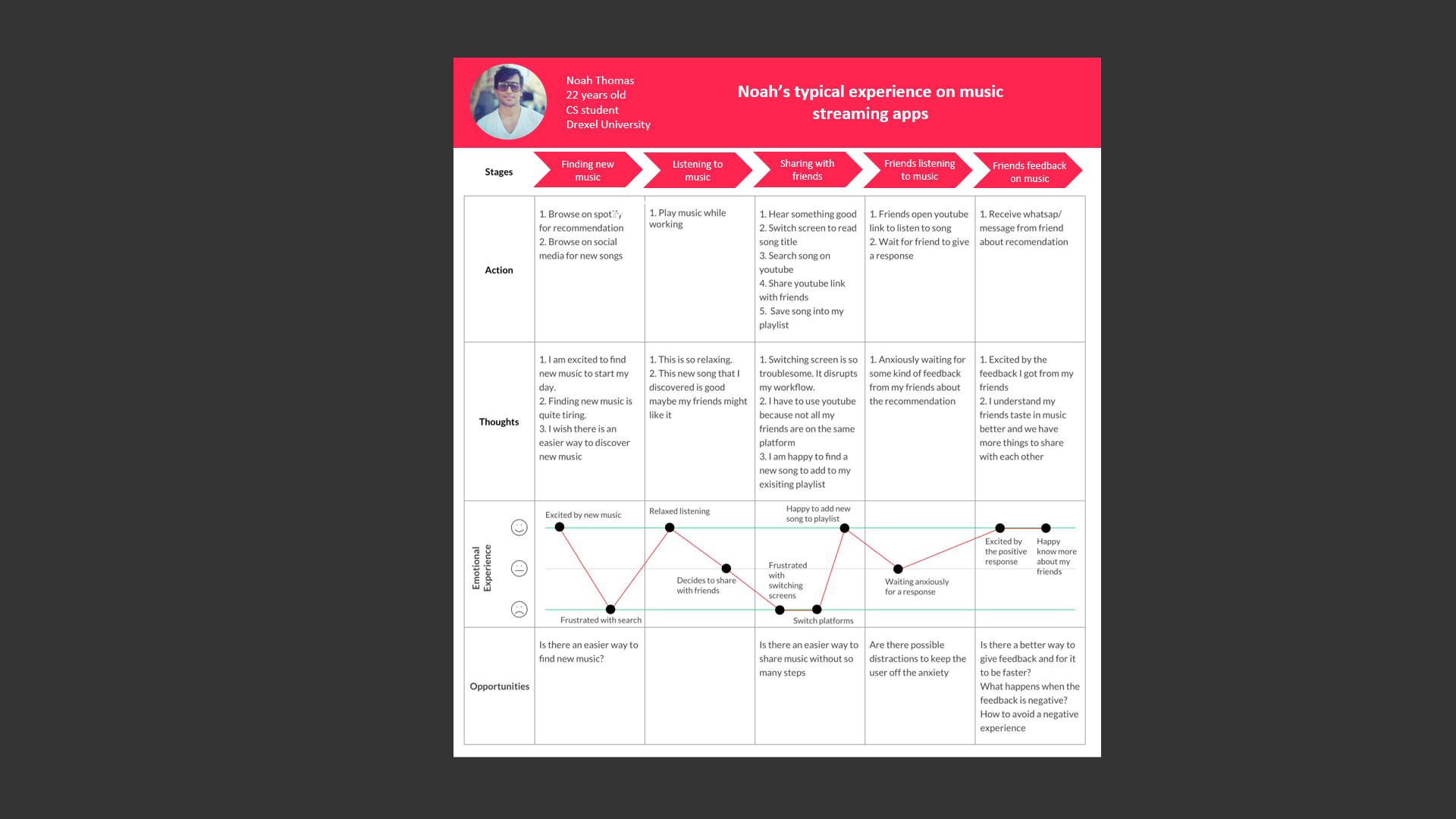

A typical user’s journey on current music streaming apps

After mapping out the journey of a typical music streaming app user, we found that the playlist creation process is long and tedious, social music sharing is not very intuitive, and finding music specific to a location is very hard.

Sketching Out Ideas

What Kinds of Moods/Emotions Do People Experience While Listening to Music?

Studying moods in music and social contexts

While perceptions of color are somewhat subjective, there are some color effects that have universal meaning. Colors in the red area of the color spectrum are known as warm colors and include red, orange, and yellow. These warm colors evoke emotions ranging from feelings of warmth and comfort to feelings of anger and hostility.

Colors on the blue side of the spectrum are known as cool colors and include blue, purple, and green. These colors are often described as calm, but can also call to mind feelings of sadness or indifference.

View full research on classification of moods in music

View full research on music in social context

Understanding What People Do When They Listen to Music

View full research on music and activity

We wanted to make sure that our preset activity tags are relatable for our users and help boost their productivity and/or involvement in the activity they are performing, so we created a list of the most common things people do while streaming music online.

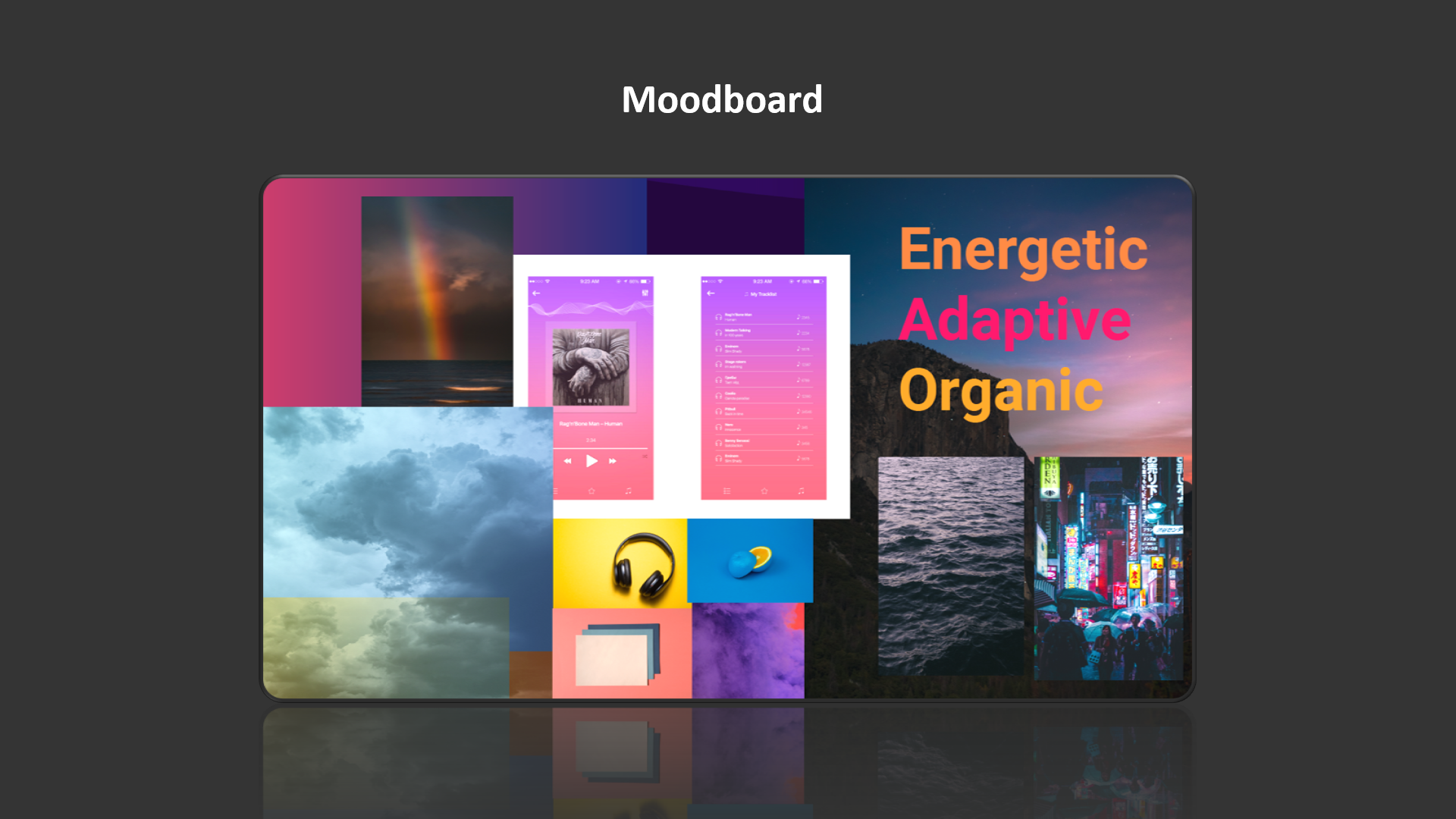

But How do we Visualize these Moods?

Studying the best ways to visually describe moods

After a lot of research and talking to designers, we found that the best way to visualize mood and emotions in music is by using color and motion.

Thinking of a concept to bring color, motion, and moods all together.

Information Architecture

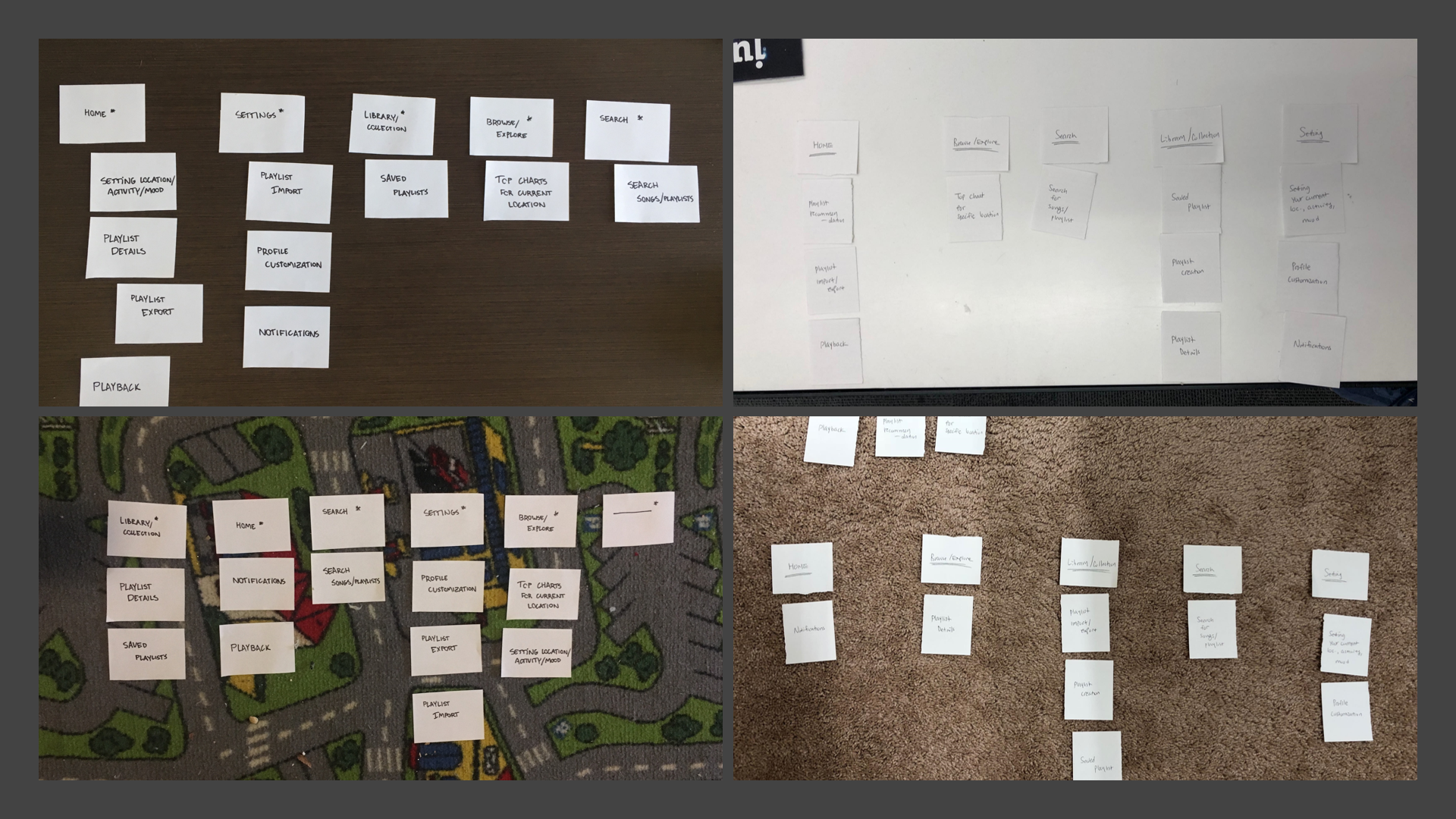

Card sort exercises

We conducted a few card sort exercises with the same participants we interviewed in the initial stages of research to get a better sense of our target users’ mental models of the user flow.

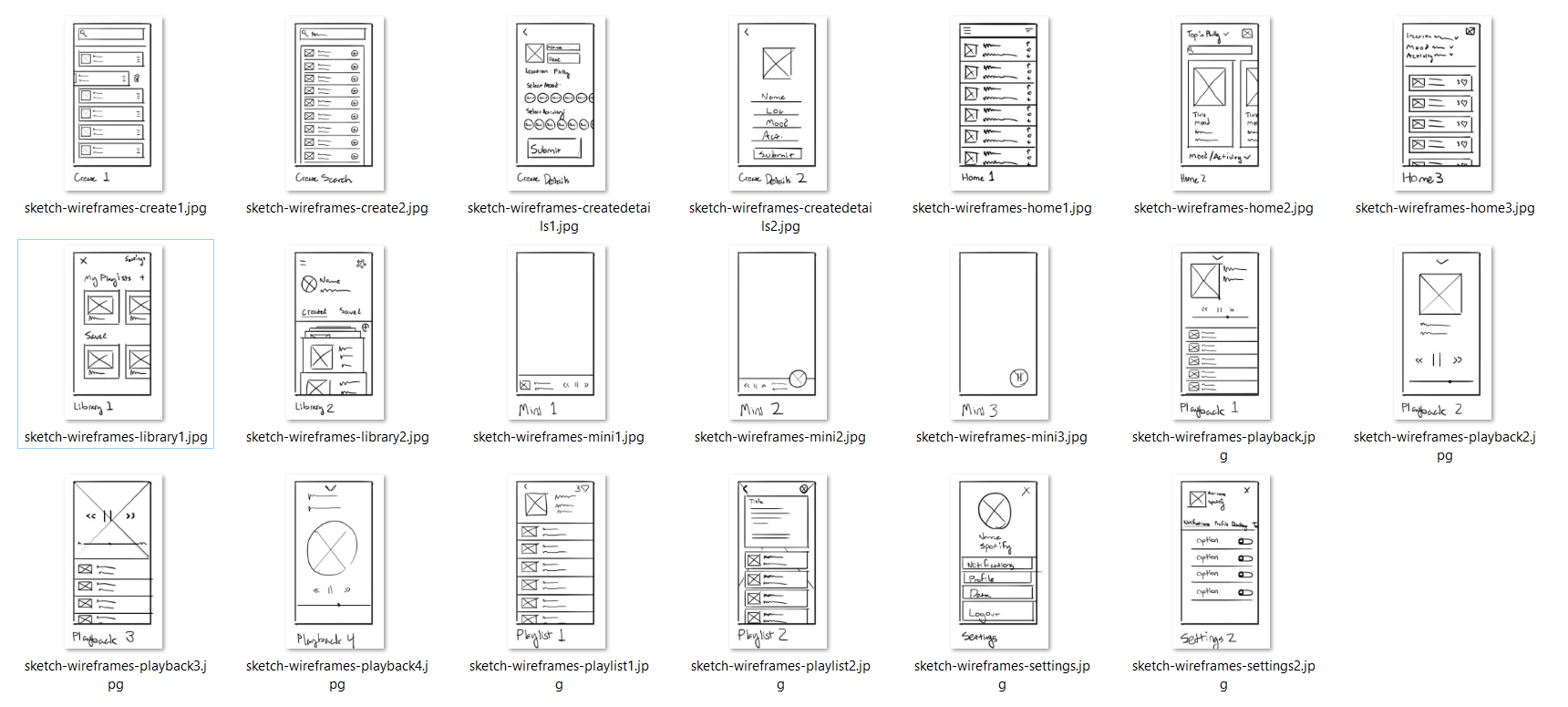

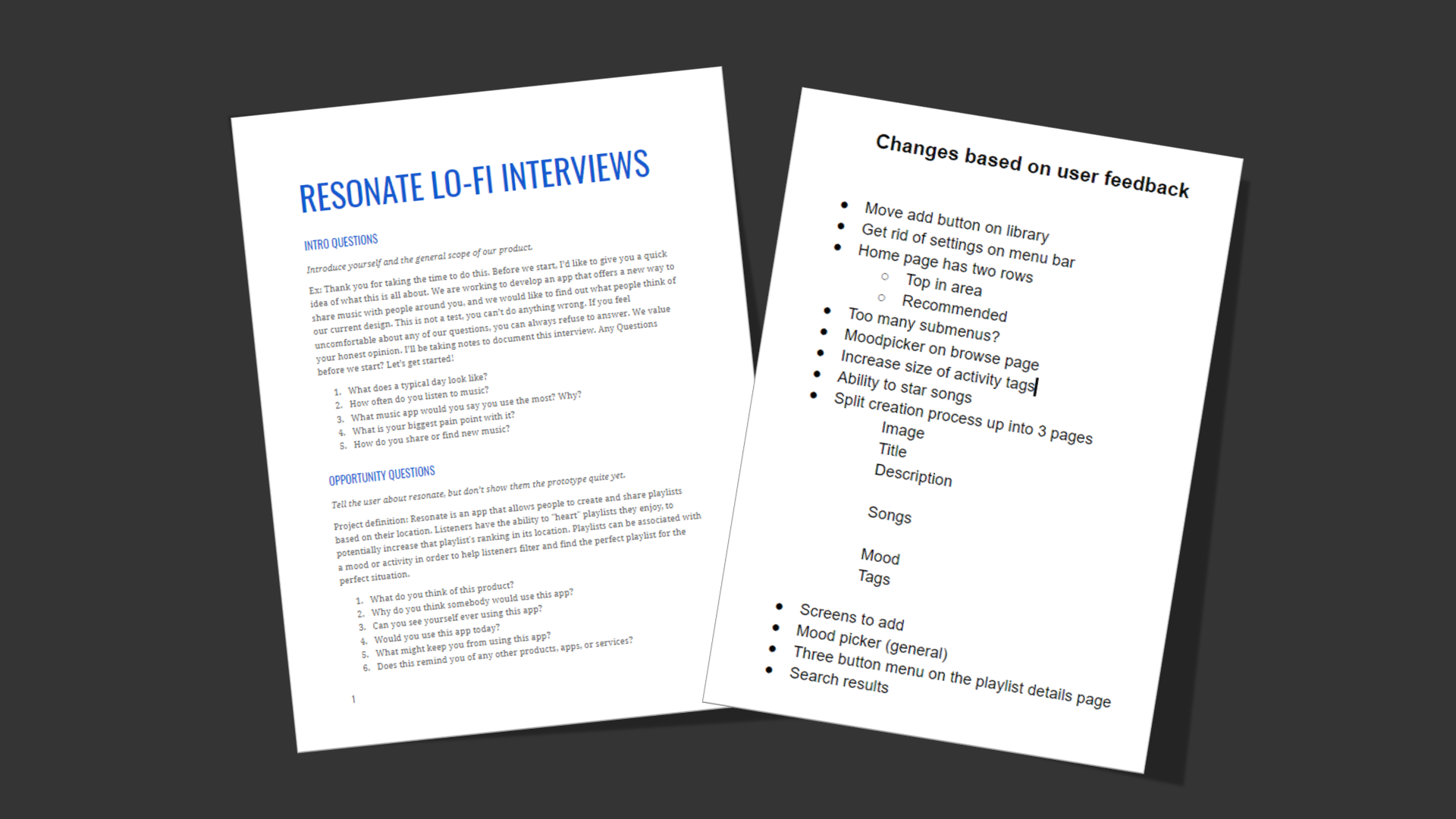

Converting Sketches to Low Fidelity Screens

Testing Low Fidelity InVision Prototype with Users

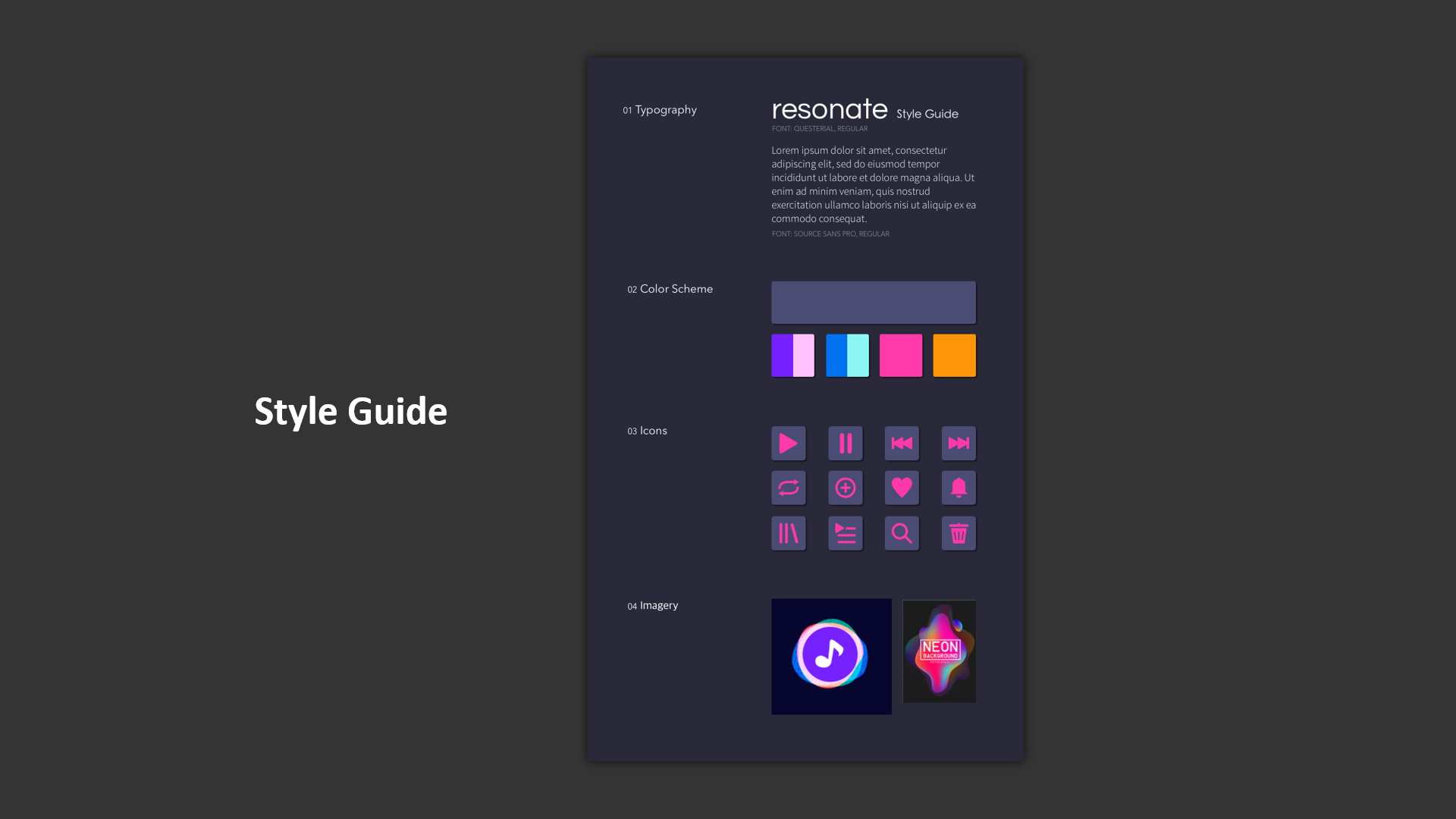

Tackling the Design Aspect

Final Design

Building the App

Development Structure

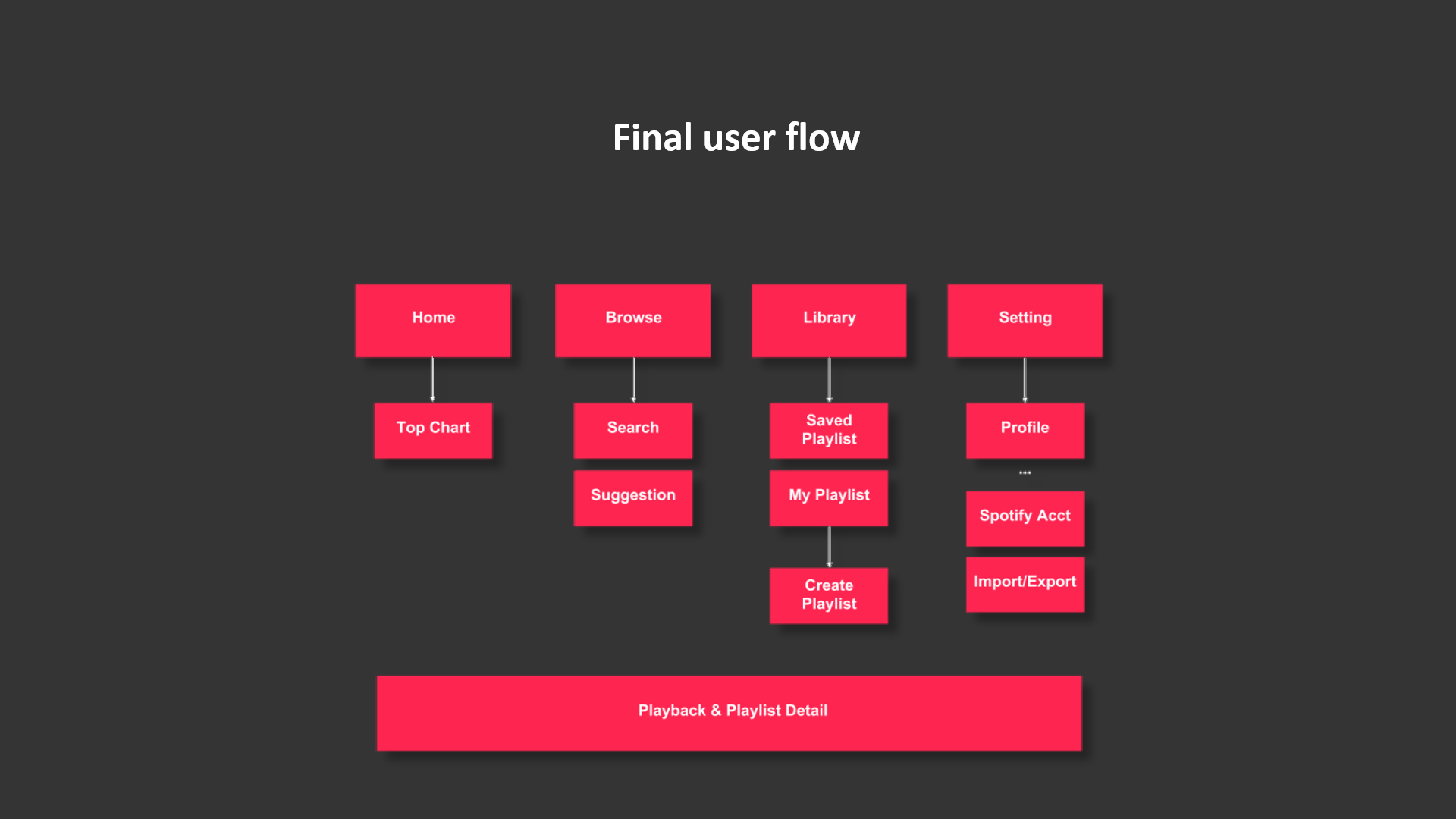

Development: To approach the development phase, we divided the whole process into two main components: the React visual component, which covers all front-end development, and the server component, which covers all back-end development.

The React visual component was further broken down into six main components (see the development structure diagram): the settingsview (settings screen), createview (create a new playlist), browseview (browse existing playlists), homeview (home screen and the mood picker overlay), playview (playlist details), and the playerview (playback screen). These six main components were in turn broken down into smaller components so that we could handle every minor detail and micro-interaction.

The server component was broken down into three main components: the database (songs, playlists, artists, etc.), API integration (Google Maps and Spotify), and recommendations (a custom algorithm that provides users with recommended playlists).
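To make the recommendations piece more concrete, here is a minimal sketch of how playlists could be ranked by mood and activity match, with hearts as a tie-breaker. This is not our actual algorithm: the `Playlist` and `Preferences` shapes, the function names, and the weights are all hypothetical and chosen only for illustration.

```typescript
// Hypothetical data shapes; the field names are assumptions for illustration.
interface Playlist {
  id: string;
  title: string;
  moods: string[];
  activities: string[];
  hearts: number;
}

interface Preferences {
  mood: string;
  activity: string;
}

// Score a playlist: tag matches dominate, hearts act as a tie-breaker.
// The weights (10 and 5) are arbitrary placeholders, not tuned values.
function scorePlaylist(p: Playlist, prefs: Preferences): number {
  const moodMatch = p.moods.some((m) => m === prefs.mood) ? 1 : 0;
  const activityMatch = p.activities.some((a) => a === prefs.activity) ? 1 : 0;
  // The logarithm dampens large heart counts so popularity cannot swamp relevance.
  return 10 * moodMatch + 5 * activityMatch + Math.log(1 + p.hearts);
}

// Return the highest-scoring playlists without mutating the input array.
function recommend(
  playlists: Playlist[],
  prefs: Preferences,
  limit: number = 10
): Playlist[] {
  return [...playlists]
    .sort((a, b) => scorePlaylist(b, prefs) - scorePlaylist(a, prefs))
    .slice(0, limit);
}
```

Calling `recommend(allPlaylists, { mood: "happy", activity: "studying" }, 5)` would return up to five playlists, with those tagged for both the mood and the activity ranked first.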

Developing the components

Front end: We used React Native to develop all the visual components of our app. We also used Redux to build some of the app's more complex micro-interactions.
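Redux describes state changes as pure reducer functions that take the current state and an action and return the next state. The sketch below shows what a reducer for the mood picker overlay and the "heart" interaction might look like. The state shape, action names, and `appReducer` are hypothetical and not taken from the Resonate codebase, and the reducer is written as a plain function so it runs without the Redux library.

```typescript
// Hypothetical state and action names, assumed for illustration only.
interface AppState {
  selectedMood: string | null;
  moodPickerOpen: boolean;
  heartedIds: string[];
}

type Action =
  | { type: "moodPicker/opened" }
  | { type: "moodPicker/moodSelected"; mood: string }
  | { type: "playlist/heartToggled"; playlistId: string };

const initialState: AppState = {
  selectedMood: null,
  moodPickerOpen: false,
  heartedIds: [],
};

// A pure reducer: it never mutates `state`, it always returns a new object.
function appReducer(state: AppState = initialState, action: Action): AppState {
  switch (action.type) {
    case "moodPicker/opened":
      return { ...state, moodPickerOpen: true };
    case "moodPicker/moodSelected":
      // Picking a mood also closes the overlay.
      return { ...state, selectedMood: action.mood, moodPickerOpen: false };
    case "playlist/heartToggled": {
      const alreadyHearted = state.heartedIds.indexOf(action.playlistId) !== -1;
      return {
        ...state,
        heartedIds: alreadyHearted
          ? state.heartedIds.filter((id) => id !== action.playlistId)
          : [...state.heartedIds, action.playlistId],
      };
    }
    default:
      return state;
  }
}
```

Because the reducer is a plain function, it can be passed to a Redux store unchanged or exercised directly in unit tests.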

Back end/Server: We used Node.js and MongoDB to store our database full of songs, playlists, artist details, and all the other cool music stuff.

API Integration: To create our location-based music feature, we used the Google Maps API and customized it to match the overall design theme. Some other general music streaming features were developed with the help of the Spotify API.
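As a rough illustration of the location-based feature, the sketch below filters playlists to those within a given radius of a point on the map, using the haversine great-circle distance. It does not call the Google Maps API. It assumes the coordinates have already been obtained (for example, from the map view), and the `GeoPoint` and `LocatedPlaylist` shapes and the function names are hypothetical.

```typescript
// Hypothetical shapes; `origin` is an assumed field holding a playlist's location.
interface GeoPoint {
  lat: number; // degrees
  lng: number; // degrees
}

interface LocatedPlaylist {
  id: string;
  origin: GeoPoint;
}

const EARTH_RADIUS_KM = 6371; // mean Earth radius

function toRadians(degrees: number): number {
  return (degrees * Math.PI) / 180;
}

// Great-circle distance between two points, using the haversine formula.
function haversineKm(a: GeoPoint, b: GeoPoint): number {
  const dLat = toRadians(b.lat - a.lat);
  const dLng = toRadians(b.lng - a.lng);
  const sinLat = Math.sin(dLat / 2);
  const sinLng = Math.sin(dLng / 2);
  const h =
    sinLat * sinLat +
    Math.cos(toRadians(a.lat)) * Math.cos(toRadians(b.lat)) * sinLng * sinLng;
  // Clamp to 1 to guard against floating-point rounding before asin.
  return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(Math.min(1, h)));
}

// Keep only playlists whose origin lies within `radiusKm` of `center`.
function playlistsNear(
  playlists: LocatedPlaylist[],
  center: GeoPoint,
  radiusKm: number
): LocatedPlaylist[] {
  return playlists.filter((p) => haversineKm(p.origin, center) <= radiusKm);
}
```

A server could apply a filter like this before ranking, so that only playlists associated with the area the user is viewing are considered.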