Project Overview

This research project is an international collaboration between Drs. Jichen Zhu (Digital Media, Drexel University) and Sebastian Risi (Digital Design, IT University of Copenhagen, Denmark).

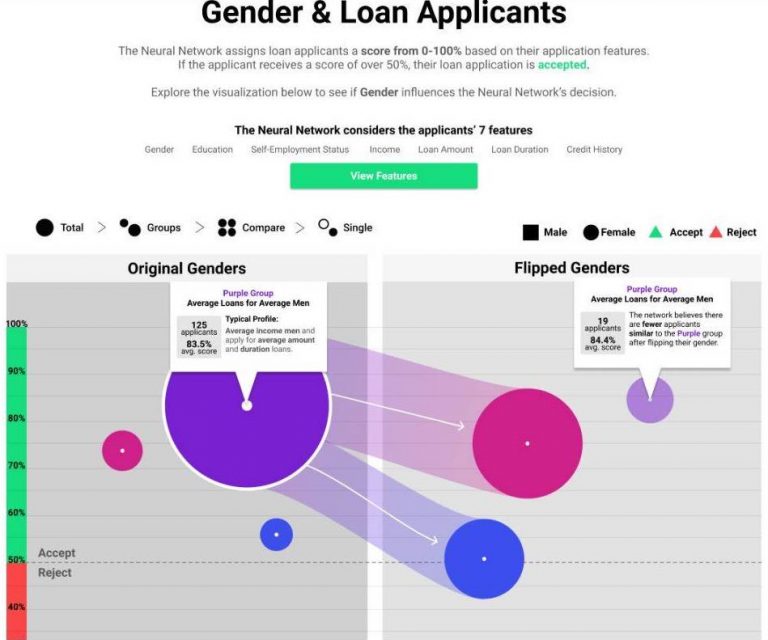

Artificial Intelligence (AI) methods are used in an increasing range of aspects of our daily lives. As the adoption of these AI applications widens, the need for transparency and accountability becomes more pressing. Governments have started to require companies to be transparent about AI applications with social significance. For example, profiling models, a widely used method for modeling certain aspects of a person (e.g., financial creditworthiness), have raised public concerns about bias.

Currently, the general public relies on AI experts to discover biases in such applications. However, this approach cannot easily scale with the rapid adoption of AI applications. More importantly, technical experts may not be fully aware of the needs of the communities about which AI algorithms are making decisions. As a result, there have been recent calls to empower non-experts to open the black box of AI and better understand its decision-making process.

In this project, we investigate how interactive visualization can help AI non-experts explore the decisions of a semantic Neural Network (NN) and reveal potential bias, in the context of profiling models for loan applications.

Sponsors

N/A

Publications

Chelsea M. Myers, Evan Freed, Luis Fernando Laris Pardo, Anushay Furqan, Sebastian Risi, Jichen Zhu, “Revealing Neural Network Bias to Non-Experts Through Interactive Counterfactual Examples,” arXiv preprint, arXiv:2001.02271